Databricks

Operational Intelligence, Engineered on Databricks

Databricks unifies analytics, ML, streaming, and AI on a single Lakehouse platform. But most enterprises stop at dashboards and pipelines without turning the Lakehouse into an AI-native execution fabric that reduces cost-to-serve, accelerates revenue realization, and powers closed-loop operations.

iOPEX goes beyond implementation. We operationalize Databricks by aligning our agentic intelligence for ServiceOps and RevOps with Databricks telemetry, so signals become actions and workflows become self-improving operational systems.

15-30%

compute cost reduction

20-40%

pipeline performance improvement

30-50%

faster model-to-production cycles

MTTR ↓ | SLA ↑

pre-built agents

The Shift

From implementation to sustained execution.

Why Lakehouse value stalls

Common realities after go-live

Build-first adoption: Pipelines and models ship but operational outcomes don’t move.

Data and process debt compounds: Optimization, lineage, and governance gaps create rework, slower delivery, and audit risk.

AI remains “insight-only”: Signals don’t translate into next actions; teams stay manual and reactive.

Cost-to-serve scales linearly: Compute inefficiency and human effort rise with volume and complexity.

The iOPEX solution: Lakehouse as an execution fabric

Operate the platform end-to-end: We run Delta, streaming, ML/GenAI, and Unity Catalog with continuous optimization, not periodic cleanups.

Embed operational intelligence: Databricks becomes the sensing layer for Command Agents triggering predictive remediation, revenue leakage detection, and automated orchestration.

Services-as-Software economics: We engineer for measurable outcomes where effort and cost per transaction decline quarter-over-quarter.

Outcomes we enable

Lower cost-to-serve through AI-led automation and workload optimization

Predictive incident detection and proactive remediation using telemetry and ML signals

Agentic execution: Command Agents that act (with human-in-the-loop assurance)

Revenue acceleration via usage analytics, renewal intelligence, and leakage prevention

Closed-loop service case orchestration (Sense → Decide → Act → Learn)

Reduced data and process debt via governance, lineage, and operating discipline

How We Deliver

A delivery model built for outcomes

01

Stabilize performance and reliability

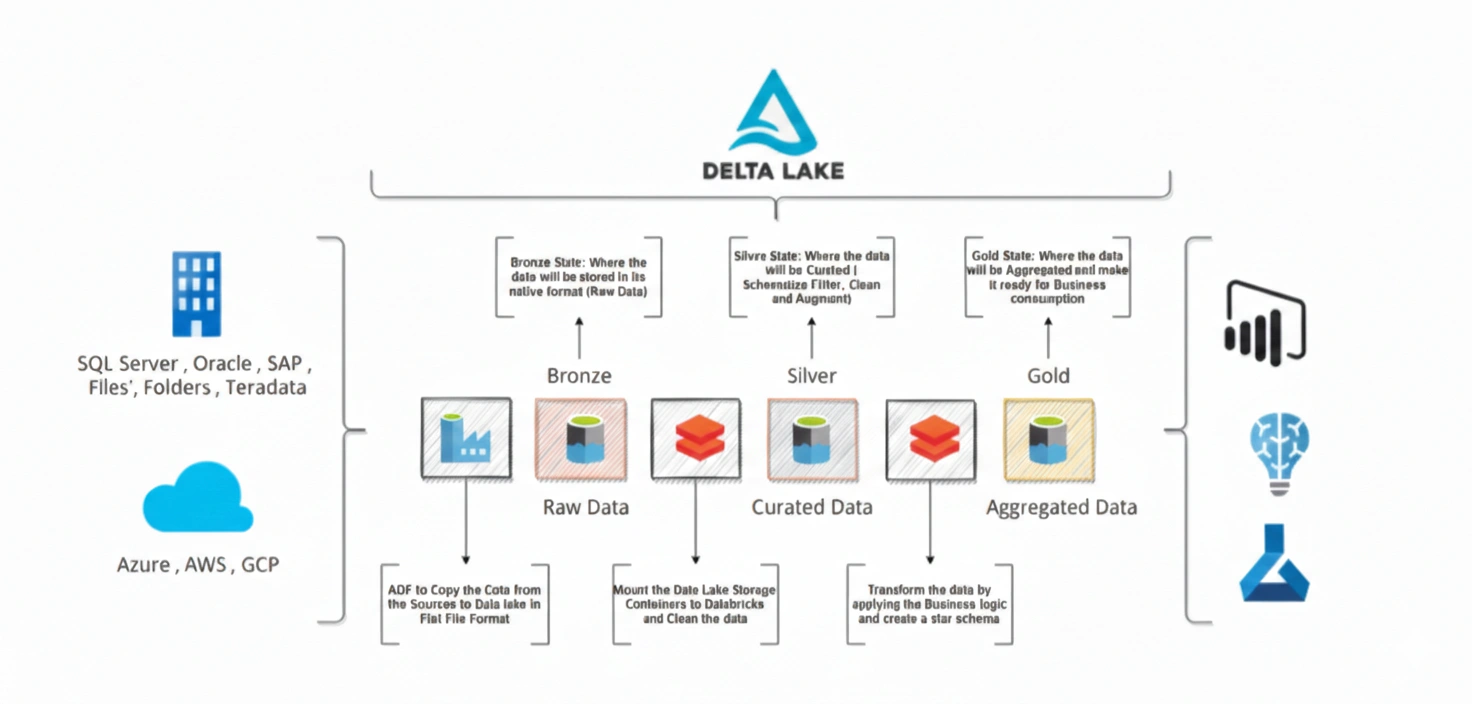

Real-time ingestion, medallion architecture, Delta optimization, streaming hardening

Outcome: faster, more reliable pipelines and reduced operational firefighting

02

Make governance audit-ready by design

Unity Catalog, lineage, RBAC/ABAC enforcement, MDM controls

Outcome: reduced rework, better trust in data, faster compliance response

03

Accelerate ML/GenAI to production

MLflow lifecycle, feature store, RAG pipelines, monitored deployments

Outcome: shorter model-to-production cycle and higher adoption with guardrails

04

Activate agentic execution on telemetry

Command Agents consume Databricks signals to trigger next-best-action and orchestration

Outcome: MTTR down, SLA up, fewer manual handoffs, closed-loop operations
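
To make the closed loop concrete, here is a minimal Python sketch of one Sense → Decide → Act → Learn cycle. It is an illustration only, not the Command Agents implementation: the `LatencyAgent` class, the latency signal, the `scale_up` action, and the 1.5x re-baselining rule are all hypothetical, and the `approve` callback stands in for human-in-the-loop assurance.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, List, Tuple


@dataclass
class LatencyAgent:
    """Hypothetical agent that watches one latency signal (illustrative only)."""
    threshold_ms: float
    history: List[float] = field(default_factory=list)

    def sense(self, reading_ms: float) -> float:
        # Sense: record the latest telemetry reading.
        self.history.append(reading_ms)
        return reading_ms

    def decide(self, reading_ms: float) -> str:
        # Decide: propose an action when the reading exceeds the threshold.
        return "scale_up" if reading_ms > self.threshold_ms else "no_action"

    def act(self, action: str, approve: Callable[[str], bool]) -> bool:
        # Act: report whether the proposed action was approved to run.
        # A real agent would call an orchestration API here (assumption).
        if action == "no_action":
            return False
        return bool(approve(action))

    def learn(self) -> None:
        # Learn: re-baseline the threshold to 1.5x the mean of the last five readings.
        self.threshold_ms = 1.5 * mean(self.history[-5:])

    def step(self, reading_ms: float, approve: Callable[[str], bool]) -> Tuple[str, bool]:
        # One full pass through the loop.
        action = self.decide(self.sense(reading_ms))
        executed = self.act(action, approve)
        self.learn()
        return action, executed
```

Calling `LatencyAgent(threshold_ms=100.0).step(250.0, approve=lambda action: True)` proposes `scale_up` and reports it as approved. Passing a callback that returns `False` keeps the action from running while the loop still learns from the reading.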

05

Drive FinOps and “declining effort” economics

Cluster sizing, workload isolation, auto-termination, scheduling policies

Outcome: compute cost reduction and sustained cost-per-transaction decline
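
As one example of what a FinOps guardrail can look like, the sketch below checks a cluster specification against an idle-timeout limit and a worker ceiling. The field names (`autotermination_minutes`, `autoscale`, `max_workers`, `num_workers`) follow the Databricks Clusters API as we understand it; the `policy_violations` helper and its default limits of 30 minutes and 8 workers are hypothetical values chosen for illustration.

```python
from typing import List


def policy_violations(cluster_spec: dict, max_idle_minutes: int = 30,
                      max_workers: int = 8) -> List[str]:
    """Return human-readable violations for one cluster spec (hypothetical helper)."""
    violations = []

    # A missing or zero value is treated as auto-termination being disabled.
    idle = cluster_spec.get("autotermination_minutes", 0)
    if idle == 0:
        violations.append("auto-termination disabled")
    elif idle > max_idle_minutes:
        violations.append(f"idle timeout {idle} min exceeds {max_idle_minutes} min")

    # Use the autoscale ceiling when present, otherwise the fixed worker count.
    autoscale = cluster_spec.get("autoscale")
    if autoscale is None:
        workers = cluster_spec.get("num_workers", 0)
    else:
        workers = autoscale.get("max_workers", 0)
    if workers > max_workers:
        violations.append(f"worker ceiling {workers} exceeds {max_workers}")

    return violations
```

A check like this could run in a scheduled job or a deployment pipeline so that oversized or never-terminating clusters are flagged before they accumulate cost.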

Ready to explore?